Assumptions

- KV Store

- Small object (< 1MB)

- Week consistency

Consideration

- Always writeable

- Conflict resolution during read

- Application can implement its own merge policy

- Or use simple policy the data store provides (last write win)

Architecture

Interface

get(key) -> (Array[object], context)- 1 object or many conflicting objects

put(key, context, object)- context: vector clock and other metadata

Partitioning

- Each node hash to a location on a ring

- Data hash to another location on ring

- rotate clockwise, find nearest node

- Improvement: Each node hash to many virtual node on the ring

Replication

- Replicates at N-1 clockwise successor nodes

- preference list - The list of nodes that is responsible for storing a particular key

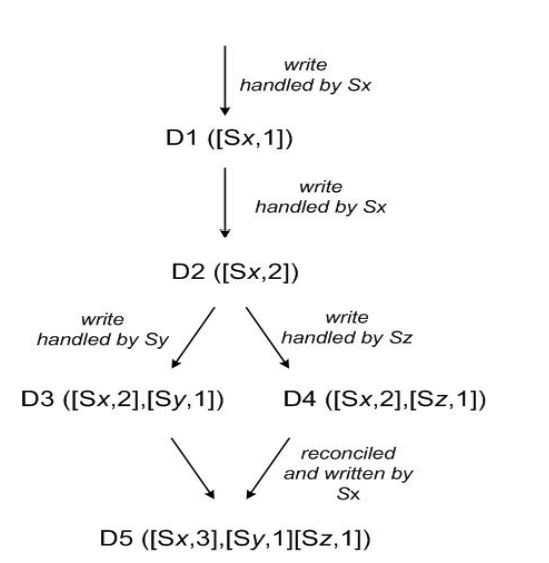

Versioning

- Vector clock compression

- Remove oldest clock when exceeds threshold

get()/put()

- Set $R$, $W$

- Write to a coordinator (nearest or load balancer)

- coordinator send to top $N$ in preference list

- wait for $W-1$ replies ($W$ in total)

- return

- Read from $N$ nodes, wait for $R$ responses

Failures

- When a server is down

- Send data to next healthy note with a hint

- When backup send data back to previously failed node

Membership change

- Require manual actions

- Each node contacts a peer at random every second to sync membership/partition changes

- Detect failure locally

???

To account for node failures, preference list contains more than N nodes

External Discovery